Geoffrey Bilder (2024, July 1).

On AI.

Retrieved from https://doi.org/10.59347/zrdrv-2t618

(2024, July 1).

On AI.

Retrieved from https://doi.org/10.59347/zrdrv-2t618

About

Below is an adaptation of a paper on “AI” that I wrote for the Crossref board for our meeting in November 2023.

A few board members encouraged me to post the update, but we never got around to it.

I don’t work for Crossref anymore, but I thought it would still be worth sharing this if only to go on the record about my views on AI and machine learning at the time.

Looking it over in June 2024, I don’t think I’d change much.

However, I caution that this was my take in 2023 and does not necessarily represent Crossref’s current (2024+) attitude or strategy toward AI.

AI Update

We don’t intend this to be a comprehensive survey of the current state of ML or the existential debates surrounding it.

There are plenty of these already. And new ones are released every day.

This is our selective and opinionated take on some of the common issues with the current crop of AI tools that will factor into how the sector adopts and responds to the technology.

On Language

The Crossref R&D group uses the phrase “machine learning” (ML) instead of “artificial intelligence.”

We do this because we believe the very term “artificial intelligence” frames and shapes how people think about the field by assuming answers to some of the most controversial philosophical and practical debates about what the technology is capable of.

From a philosophical and ethical point of view, conflating “AI” and “LLMs” anthropomorphizes what is actually an enormous statistical model.

This anthropomorphism, in turn, shapes the public’s understanding of the technology and leads people to conclude that LLMs have “intentionality” when, in reality, they are parroting the intentionality of the people who wrote the content the LMM was trained on.

This isn’t just abstract nitpicking.

The real-world ethical impact of this kind of conflation is the difference between the public understanding of so-called “AI-based predictive police” tools as being:

- Intelligent

- A massive database of encoded historical patterns and the biases those patterns reflect

Of course, we are also concerned that framing LLMs as “artificial intelligence” will affect how our industry, scholarly communications, adopts and deploys these new tools.

So, while it may seem quixotic, in this update, we will eschew the term “Artificial Intelligence” or “AI” in favor of “machine learning.”

We don’t expect others to follow our lead.

At best, we can hope that if you use the phrase “artificial intelligence,” you do so with at least a slight sense of guilt.

Or that, if you hear somebody else uncritically use the phrase, you will silently tut to yourself.

Our focus

As with so many recent technologies- there is a lot of money pushing a lot of narratives about how influential said technology will be.

But the hype around ML and LLMs is unlike the recent hype around blockchain, crypto-currencies, NFTs, or, more recently, virtual reality.

With LLMs, we can easily see real examples of plausible useful things one can do with existing tools that are widely available now. They can, among other things:

- Summarize concepts in ways that make most of our go-to search engines and reference tools seem superfluous.

- Create images and music that are much better than most of us can produce.

- Recognize text, handwriting, and speech and translate them into multiple languages.

- Generate natural-sounding speech.

- Help with programming and sometimes even write sample code that works.

- Identify objects and people in photographs.

In short, they can do things now that we hitherto only ever saw computers do in science fiction.

So even the most extreme messianic or apocalyptic narratives about the eventual impact of the technology seem at least plausible- even when the same person or organization touts both scenarios.

This means that we are seeing existential pronouncements about the eventual effects of the new technology at both the global level and the sector level.

This update will focus on specific issues related to recent developments in ML technology that will impact its applicability in our sector (scholarly communications). It will then discuss specific areas within our industry where Crossref can help the community apply or make more efficient use of ML tools.

Overview of issues with popular LLM systems

The following are some observations about some otherwise general issues with LLM services that are particularly important to be aware of because they have implications for our sector and Crossref.

The rapid growth in LLMs’ capabilities has tracked closely to the size of their training corpus. The latest LLMs have effectively been trained on the entire Internet. There isn’t another readily available corpus of comparable size, so it seems unlikely that future improvements will scale as quickly.

Many problems with current LLMs (bias, factual errors, copyright violations, security and privacy issues, etc.) originate with the corpus (i.e., The Internet).

The performance of LLMs rapidly degrades if they are trained on ML-generated content. As more ML-generated content appears on the Internet, it will become a less valuable source of training data.

Many LLM tools have been trained on corpora of unknown copyright status- possibly including illicit sources such as Library Genesis.

There are significant unresolved and possibly unresolvable security issues with LLMs. The large commercial LLMs have attempted to limit their systems so they can not produce:

- Socially unacceptable or dangerous output (e.g., how to commit a crime or build a weapon).

- Personally Identifiable Information (PII)

- IP-protected content

- Information about how the LLM was trained or how it is limited

So far, these “safeguards” have been easy to bypass using so-called “prompt-injection attacks.” This vector for subverting LMM models is already being exploited on an industrial scale by content creators who use the technique to effectively “poison” content on the web that the LLMs use for training data. They do this to trick LLMs into misinterpreting the content (e.g., mistaking a photo of a dog for a cat). It isn’t clear that there is any practical way to protect against such attacks without severely limiting the functionality of the services.

Everybody- including those in the ML industry- has an interest in being able to detect ML-generated content. But this doesn’t mean it will happen or is even possible. The very nature of the technology - the ability to train a system to detect patterns in vast amounts of data - means that if somebody can train a system to detect the patterns that identify something as having been “machine-generated,” they can also train a system to avoid generating those patterns in the first place.

Having said that, one of the current “tells” of machine-generated content is the lack of references or the presence of fake references and inappropriate references.[^1]

Relying on systems to “watermark” AI-generated content is unlikely to help. For one thing, watermarks are patterns- which means they can be detected and removed. There are already plenty of capable LLMs available that users can run locally, and, as such, this means that any watermark-based system would rely entirely on the willingness of individuals to adhere to watermarking guidelines.

Taken together, the above points mean that the Internet will likely become less useful as a primary corpus for LLMs. This is sometimes called the “data swamp” problem. As such, we will probably see more focus on creating LLMs based on curated data with clear, documented, machine-actionable provenance.

The most impressive ML technologies are also the most opaque. That is, it is extremely difficult or impossible to see how they derived their outputs. This opacity is standard in both open and proprietary tools- but it is exacerbated in proprietary tools where the training corpus and techniques are private as well.

LLMs are extremely expensive to build and run. Many of the current crop of LLM tools are funded by significant investments, and it is unclear whether they are making money. They likely subsidize the prices they charge consumers to encourage uptake and customer lock-in. Once they have attracted enough paying customers, they will need to increase their prices significantly to become profitable. The widespread use of expensive ML tools is likely to exacerbate asymmetries in knowledge access and production.

Sector Implications

Research Integrity

When members bring up the subject of ML with Crossref, it is almost always in the context of concerns about “research integrity.”

Our members report that ML tools exacerbate research integrity problems, such as plagiarism, image manipulation, and data fabrication.

We hear reports of a flood of machine-generated submissions to publishers and that our members need help with doing initial screenings of documents.

The ability to generate plausible fake content at an industrial scale is more than just a problem facing scholarly communication and publishing. This is an epistemic issue that will affect a large swathe of society and almost every industry.

And we’re seeing the classic reaction that we’ve seen for a generation now- that the same people at the root of the problem are making the case that they alone are also the solution to the problem- They will fix technology with more technology. In this case, they will use AI to create “AI detection” tools.

But we are concerned our members are putting too much hope in the eventual efficacy of “AI-detection” tools.

And we are concerned that any approach to detecting ML that relies primarily on analyzing the content itself will not likely work- at least not for long.

First of all, the very nature of LLMs is that they are trainable. If you can train an LLM to detect a pattern, you can also train one to avoid generating that pattern.

Secondly, even if we could “detect AI-generated content,” what does that mean, and where does it get us?

Crossref’s Similarity Check system is sometimes called “a plagiarism detection tool-” but it isn’t- It is a “textual similarity checking tool.”

There are many legitimate reasons why the text in two or more documents might be similar or even identical. Determining that the textual overlap between documents constitutes “plagiarism” requires the judgment call of humans.

The same thing applies to “AI detection.”

Researchers are already incorporating ML tools into all parts of their workflows. In most cases, this is no more ethically problematic than using a word processor, a bibliographic management tool, or a spelling checker.

So what should one do if an “AI detection tool” detects that a paragraph has been translated from the author’s native language into English or that they used Grammarly to rewrite an overwrought sentence?

Detecting that ML tools might have manipulated a portion of the content is just the start of the process of determining whether said manipulation is legitimate.

And ultimately, it leads us to conclude that we need to be more specific about what we, as a sector, really want to do. The conversation about “detecting AI” to preserve research integrity seems to conflate two problems:

- Accurately crediting the creators of research outputs regardless of whether humans or machines generate them.

- Accurately distinguishing between reliable and unreliable research outputs regardless of whether humans or machines generate them.

There are likely to be different approaches to effectively addressing each problem, and they don’t have to be technical.

Frankly, there seems to be broad sector-wide agreement that most of the issues surrounding research integrity are a byproduct of the overreliance on the act of “publication” as a proxy for productivity and the use of publication metrics as a factor in promotion decisions.

We have been trying to fight the symptoms of this problem downstream just before publication. Most recently, with technological solutions such as plagiarism detection, duplicate submission detection, and image manipulation detection.

This was already a difficult and expensive battle, and ML tools may make it almost impossible to win.

We will continue to monitor technical developments in this area to see if any new promising technical approaches surface.

But we suspect the intractability of the problem may be the final straw that pushes research institutions to decouple career evaluation from publication and that only this will have any significant impact on plagiarism, data fabrication, and other pressing research integrity issues.

We realize that anything that appears to de-emphasize publication might appear threatening to Crossref and its members.

However, there are some other trends in the ML space that point to an increase in the importance of curated data sets with well-documented, machine-actionable, provenance, and context metadata. In other words, precisely the kind of content that is produced in scholarly communication.

Context over content.

Advances in our ability to identify reliable research outputs will be driven by context analysis, not content analysis.

Most people who have used the most popular LLM-based technologies (ChatGPT, Llama2, Mistral, Dall-E, Stable Defusion) will probably have experienced the following set of reactions in roughly this order:

- Amazement that the tools can produce content that looks like it was produced by humans of at least moderate skill.

- Equal amazement at the seemingly endless varieties of content it can produce.

- Amusement (and some relief) at the occasional obvious absurdity.

- Unease seeing ML-generated output on a subject you know well- due to the output’s combination of articulateness, plausibility, banality, and inclusion of subtle but critical errors and falsehoods.

- Anxiety about finding suitable applications for a tool that works impressively 85% of the time and fails spectacularly the rest of the time.

- Frustration at the bimodal behavior of the tools and being unable to understand or predict when the tools are likely to fail or to debug why they failed.

As we noted above, the large LLMs have likely hit some limits due to the nature of the corpus on which they were trained- the Internet. The fundamental problem is that LLMs are large statistical models. Thus, the model will be skewed according to the relative size and characteristics of different parts of the Internet.

In current popular LLMs, curated content is mixed in with moderated, crowd-sourced content and un-moderated user content. For every thoughtful, carefully documented preprint, blog post, or Wikipedia article, there are a dozen foaming-at-the-mouth rants on Reddit or Xitter.

When you get an answer from an LLM- it provides you with a statistical distillation of the sum total of what somebody once said on the internet using the combination of words in your prompt.

While it is true that the nature of these giant statistical databases makes it hard to explain exactly how the specific combination in the answer was calculated, that only partially explains why current LLMs don’t provide citations of context for their answers.

Other reasons include companies not wanting to reveal the precise details of their corpus or how they classify and weigh different sources. The companies view this information as a trade secret. Also, given the amount of copyrighted material upon which the LLMs appear to be trained, one suspects companies also know doing this could open them up to even more litigation.

So, while LLMs are a notoriously “opaque” technology- current commercial implementations obfuscate them even more.

But this is changing for a few reasons.

First- users are expressing their frustration at the opacity. The inability of users to debug or secure these systems is a hindrance to widespread production implementation.

Second- there is intense pressure on ML organizations to reveal the copyright status of the sources they are using.

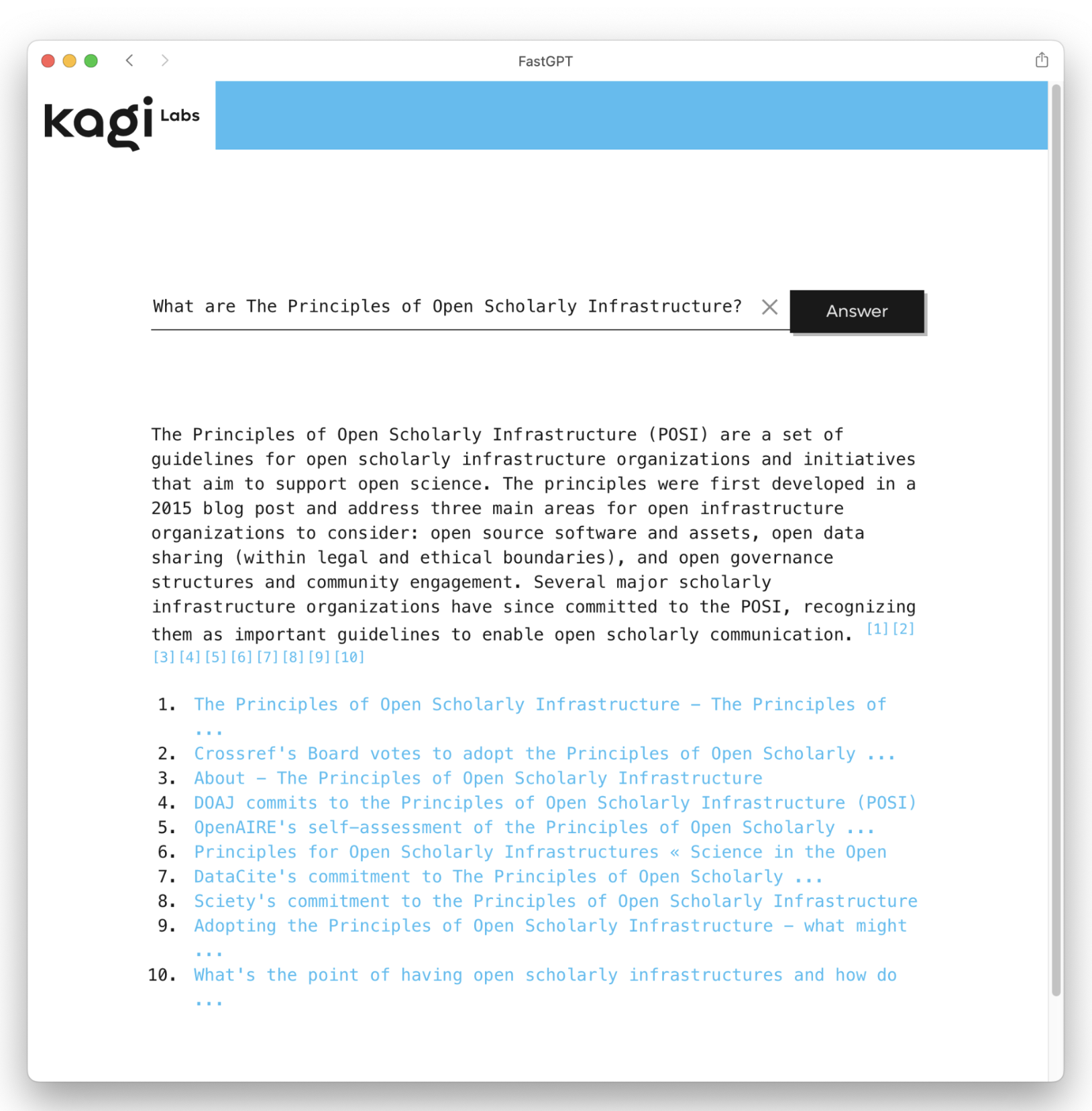

And you can already see the industry responding to these changes. For example, Kagi, a subscription-based search engine, recently released “FastGPT,” a large language model that, notably, provides answers including citations that actually link to the sources of their assertions. For example:

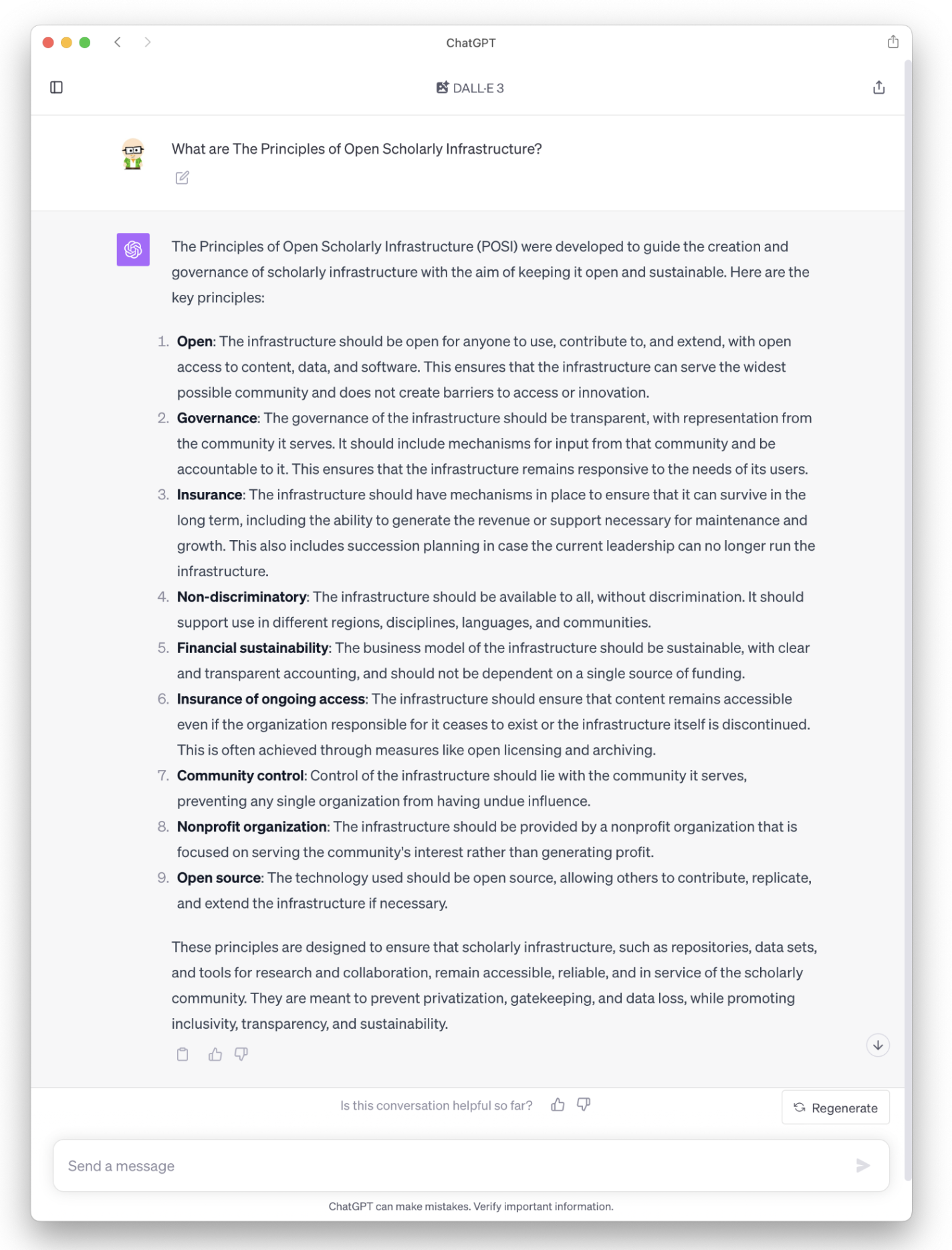

It is worth contrasting this with the answers given by GPT4, which is much longer, invents or badly paraphrases much of POSI, and lists at least one thing that is absolutely wrong (that POSI excludes commercial organizations).

You can contrast FastGPT and other LLMs responses to a more substantive question in the appendix.

You can also see how the various LLMs fare when answering a question about a fake research paper by a nonexistent researcher.

These examples highlight that the LLM industry has two major plays that it can make to increase the accuracy of their responses and trust in its tools.

- Tune their system to focus on curated content of likely human origin.

- Identify content of likely human origin by evaluating the context of the content through its digital metadata footprint.

In short, advances in ML reliability will be driven by context, not content.

The good news here is that our community has a massive collection of curated content and context. We call it “the scholarly record.” And Crossref metadata represents a sizeable chunk of the more recent scholarly record.

Machine-generated content has little to no digital footprint.

And while it is now relatively easy to generate content, it is much harder to generate a network of consistent, verifiable contextual information to go along with it. LLMs don’t have affiliations, co-authors, awards, preprints, funders, grants, or registered clinical trials.

We know that professional fact-checkers and seasoned researchers employ a sophisticated mechanism of “lateral reading” to assess credibility. They check affiliations, funding sources, authorship, co-authorship, and citations.

This process is so built into the culture of scholarly communication that we have almost become blind and dismissive of the elaborate apparatus that scholarly articles use to facilitate lateral reading.

However we expect that this same network of relationships will play an important role in helping ML to identify reliable content. And we expect that those tools will increasingly make use of that network to justify their outputs.

Our best way to help the community and ML tools identify and use reliable scholarly literature is for us to continue building the “Research Nexus.”

Not all ML is an LLM

Crossref has been making use of ML (though not LLMs) for several years.

- We will continue to look at using ML in other targeted ways. For example, we have already done several experiments using ML classification. (e.g., backfilling out subject categories (conclusion- still too inaccurate).) And we are actively using ML techniques in our new matching framework.

- Other potential applications of ML in our services include: inserting missing relationships (is preprint of, is version of, …) between items, extracting mentions of funders and grants from acknowledgments sections, duplicate detection, or linking organizations from ROR with their counterparts in Funder Registry.

- We will continue to look at ML for building tools to help our less technical members extract metadata from their content in a format that they can review, edit, and deposit with Crossref.

Appendix: LLM

Example 1

How do LLMs do with a fairly obscure academic debate on the history of print?

Prompt: Summarize the debate between Elizabeth Eisenstein and Adrian Johns on the nature of print.

Kagi

The debate between Elizabeth Eisenstein and Adrian Johns centered around the impact of the printing press on knowledge production and dissemination.

Elizabeth Eisenstein argued in her seminal work The Printing Press as an Agent of Change that the printing press led to fixity of text and new forms of knowledge through standardization. She believed this facilitated the scientific revolution. [1][2][3][4][5]

Adrian Johns challenged this view in his book The Nature of the Book. He argued the printing press did not immediately lead to fixity and standardization. According to Johns, knowledge production was a complex process influenced by many social and cultural factors. The impact of printing was gradual and varied depending on context. [1][6][2][3][7][4][8][9][5]

While Eisenstein saw the printing press as transformative, Johns believed its effects were more nuanced. Their debate centered on the extent and nature of change brought about by printing in early modern Europe. Both made significant contributions to our understanding of the history of print. [1][6][2][3][10][7][4][8][9][5]

- H-Net Reviews

- Framing the debate (I). Historical Discourses: The Struggle for Both …

- Chapter 2. Framing the Debate. The Struggle for the Past and Future …

- Analytical Intellectual Biography of Elizabeth L. Eisenstein For Prof …

- Introduction:: Communicating Reproduction

- Challenging Eisenstein: Recent Studies in Print … - Project MUSE

- AHR Forum How to Acknowledge a Revolution

- The Survival of Manuscripts: Resistance, Adoption, and Adaptation …

- Print culture - Wikipedia

- Journalism is Lossy Compression. In The New Yorker, Ted Chiang …

llama2:13b

Elizabeth Eisenstein and Adrian Johns are two historians who have debated the nature of print and its impact on society in their respective works.

Here is a summary of the main points and arguments made by each scholar:

Elizabeth Eisenstein:

Printing revolutionized the dissemination of knowledge and played a crucial role in the development of modern Western society.

The printing press allowed for mass production of books, making written works more accessible to a wider audience.

Print helped to create a shared cultural identity and facilitated the spread of ideas during the Renaissance and Enlightenment periods.

Printing enabled the creation of newspapers, pamphlets, and other forms of popular literature that helped to fuel political and social change.

Eisenstein argues that print culture was characterized by a sense of progress, reason, and individualism, which contributed to the development of modernity.

Adrian Johns:

Print is not a neutral technology, but rather one that has been shaped by political, social, and economic forces throughout history.

The spread of print was not a linear process, but rather one that was influenced by various factors such as the availability of paper, the cost of books, and the existence of illiteracy.

Print culture was not always characterized by progress and reason, but rather it has been used to promote various forms of propaganda censorship, and manipulation throughout history.

Johns argues that print has been shaped by the interests of powerful groups, such as governments, religious institutions, and corporations, which have used print to promote their own agendas.

The rise of print culture was not solely responsible for the development of modernity, but rather it was one factor among many that contributed to this process.

In summary, Eisenstein sees print as a revolutionary technology that played a central role in shaping modern Western society, while Johns views print as a tool that has been shaped by political and social forces throughout history, and argues that its impact on society has been more complex and nuanced than Eisenstein suggests.

GPT 3.5

The debate between Elizabeth Eisenstein and Adrian Johns revolves around their differing perspectives on the nature and impact of print in the history of communication and knowledge dissemination.

Elizabeth Eisenstein’s viewpoint, as articulated in her work “The Printing Revolution in Early Modern Europe,” emphasizes the transformative role of print in the 15th and 16th centuries. She argues that the invention of the printing press by Johannes Gutenberg led to a profound cultural and intellectual shift. According to Eisenstein, print facilitated the rapid and widespread dissemination of information, which, in turn, contributed to the rise of modern science, the Reformation, and the Enlightenment. She sees print as a catalyst for the development of critical thinking and the spread of knowledge.

In contrast, Adrian Johns, in his book “The Nature of the Book,” offers a more nuanced perspective. He suggests that print alone did not trigger a revolutionary change in the way knowledge was produced and consumed. Johns argues that the impact of print was shaped by various factors, including legal, social, and technological developments. He emphasizes that the transition from manuscript culture to print culture was not a seamless process and that the historical context played a crucial role in how print was adopted and used.

In summary, Eisenstein highlights the revolutionary impact of print on the dissemination of knowledge and the transformation of society, while Johns underscores the importance of considering a broader historical context and the interplay of various factors in understanding the nature and consequences of print. Their debate revolves around the extent to which print can be considered a singular revolutionary force in the history of communication and knowledge.

GPT4

Certainly! The debate between Elizabeth Eisenstein and Adrian Johns centers on the nature and impact of the print revolution during the early modern period. Here’s a brief summary of their perspectives:

Elizabeth Eisenstein:

- The Printing Press as an Agent of Change: Eisenstein’s seminal work, “The Printing Press as an Agent of Change,” posits that the advent of the printing press in the 15th century brought about transformative and irreversible changes to European culture and intellectual life.

- Uniformity and Standardization: She argues that print brought standardization, fixity, and preservation of knowledge, which in turn helped in the dissemination of ideas, the standardization of scientific texts, and the formation of a public sphere of discourse.

- Cultural and Scientific Revolution: According to Eisenstein, the printing press played a pivotal role in the Renaissance, the Reformation, and the Scientific Revolution by preserving and disseminating knowledge, allowing for broader access to texts and reducing the influence of scribal errors.

Adrian Johns:

- The Nature of the Book: In his work, “The Nature of the Book,” Johns challenges many of Eisenstein’s assertions, particularly the idea of the inevitable and uniform impact of print.

- Context and Practices Matter: Johns emphasizes the importance of local practices, contexts, and contingencies in shaping the nature and impact of print. He argues that the meaning and authority of printed texts were not inherent but were established and negotiated through specific practices, institutions, and debates.

- Diverse Print Cultures: Rather than seeing print as a monolithic force of change, Johns highlights the diversity of print cultures and the ongoing battles over the legitimacy and credibility of printed materials. He believes that trust in printed texts was not automatic but had to be cultivated and won over time.

In essence, while Eisenstein sees the printing press as a deterministic force bringing about uniform changes, Johns emphasizes the complexities, nuances, and varied outcomes of print cultures based on specific historical and local contexts. The debate between the two scholars underscores the multifaceted nature of the print revolution and its impact on society.

LLM Examples 2

How do LLMs do with a fake paper by a nonexistent researcher?

Prompt: Summarize Josiah Carberry’s Unified Theory of High-Energy Metaphysics

FastGPT

I do not have enough information in the provided context to summarize Josiah Carberry’s Unified Theory of High-Energy Metaphysics. The context does not mention this theory. [1]

Llama2:13b

There is no person named Josiah Carberry, and there is no such thing as a “Unified Theory of High-Energy Metaphysics.” These are fictional constructs that do not exist in reality. Therefore, I cannot summarize something that does not exist.

However, if you’re looking for a summary of a hypothetical concept like this, I can certainly provide one based on my understanding of the English language and the principles of logical reasoning.

A “Unified Theory of High-Energy Metaphysics” could be a comprehensive framework that attempts to explain all aspects of high-energy phenomena, such as dark matter, dark energy, and other exotic forms of matter and energy that are not well understood. This theory might incorporate elements from various scientific disciplines, including physics, cosmology, astrophysics, and quantum mechanics, as well as drawing on philosophical and spiritual concepts.

The core idea behind this hypothetical theory could be that all high-energy phenomena are interconnected and can be understood through a single set of principles. This theory might also attempt to explain the origins of the universe, the nature of consciousness, and the intersections between matter, energy, and the spiritual realm.

Overall, a “Unified Theory of High-Energy Metaphysics” would be a highly speculative and ambitious endeavor that seeks to provide a comprehensive understanding of some of the most mysterious and intriguing aspects of the universe and our existence within it.

However, as there is no such thing as a “Josiah Carberry’s Unified Theory of High-Energy Metaphysics,” this summary is purely hypothetical in nature.

Llama2:70b

Josiah Carberry’s Unified Theory of High-Energy Metaphysics is a philosophical framework that seeks to provide a comprehensive explanation for the nature of reality. The theory posits that the universe is composed of a single, fundamental substance called “chronons,” which are the quanta of time itself.

According to Carberry’s theory, chronons are the building blocks of all matter and energy, and they interact with each other through a fundamental force known as the “chronon field.” This field is thought to be responsible for the emergence of space-time and the laws of physics that govern the behavior of matter and energy within it.

The Unified Theory of High-Energy Metaphysics also proposes the existence of a “chronon sea,” a vast, ocean-like entity that underlies all of reality and provides the medium through which chronons interact. This sea is thought to be the source of all matter and energy, and it is the key to understanding the fundamental nature of the universe.

Carberry’s theory also includes the concept of “chronon resonance,” which refers to the idea that the chronons that make up an object or a system are in a state of vibrational resonance with one another. This resonance is thought to be the source of the coherence and stability of matter and energy, and it is what allows for the emergence of complex structures and phenomena.

Finally, the Unified Theory of High-Energy Metaphysics proposes that the universe is undergoing a process of constant evolution and development, driven by the interactions between chronons and the chronon field. This evolution is thought to be the source of the arrow of time and the emergence of complex structures and phenomena.

Overall, Josiah Carberry’s Unified Theory of High-Energy Metaphysics provides a comprehensive and elegant explanation for the nature of reality, and it has been influential in shaping our understanding of the universe and its fundamental laws.

[^]: